K-means Clustering via Principal Component Analysis


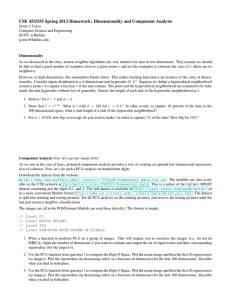

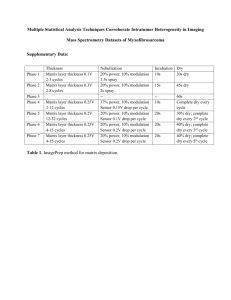

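According to the paper by Chris Ding and Xiaofeng He, Int'l Conf. Machine Learning (ICML), Banff, Canada, 2004.

Traditional K-means Clustering

K-means minimizes the sum of squared errors

$$J_K = \sum_{k=1}^{K} \sum_{i \in C_k} \lVert x_i - m_k \rVert^2,$$

where the data matrix is $X = (x_1, \ldots, x_n)$ with $x_i = (x_{i1}, \ldots, x_{id})^T$, and the centroid of cluster $C_k$ is

$$m_k = \frac{1}{n_k} \sum_{i \in C_k} x_i,$$

with $n_k$ the number of points in $C_k$.

Principal Component Analysis (PCA)

Centered data matrix:

$$Y = (y_1, \ldots, y_n), \qquad y_i = x_i - \bar{x}, \qquad \bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i$$

Covariance matrix:

$$\frac{1}{n-1} Y Y^T = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})(x_i - \bar{x})^T$$

The factor $\frac{1}{n-1}$ is ignored in what follows; it rescales the eigenvalues but does not change the eigenvectors.

PCA - continuation

Eigenvalues and eigenvectors:

$$Y Y^T u_k = \lambda_k u_k, \qquad Y^T Y v_k = \lambda_k v_k, \qquad v_k = Y^T u_k / \lambda_k^{1/2}$$

Singular value decomposition (SVD):

$$Y = \sum_k \lambda_k^{1/2} u_k v_k^T$$

PCA - example

[Figure: PCA example]

K-means → PCA

Indicator vectors:

$$h_k = (0, \ldots, 0, \underbrace{1, \ldots, 1}_{n_k}, 0, \ldots, 0)^T / n_k^{1/2}, \qquad H_K = (h_1, \ldots, h_K)$$

Criterion:

$$J_K = \operatorname{Tr}(X^T X) - \operatorname{Tr}(H_K^T X^T X H_K)$$

Linear transform by a $K \times K$ orthonormal matrix $T$:

$$Q_K = (q_1, \ldots, q_K) = H_K T$$

Last column of $T$:

$$t_K = \left(\sqrt{n_1/n}, \ldots, \sqrt{n_K/n}\right)^T$$

K-means → PCA - continuation

The last column of $Q_K$ is then a constant vector:

$$q_K = \sqrt{\frac{n_1}{n}}\, h_1 + \cdots + \sqrt{\frac{n_K}{n}}\, h_K = \frac{1}{\sqrt{n}}\, e, \qquad e = (1, \ldots, 1)^T$$

Since $J_K$ does not change when all points are shifted by $\bar{x}$, $X$ can be replaced by $Y$; and because $Y e = 0$, the $q_K$ term drops out. Therefore, with $Q_{K-1} = (q_1, \ldots, q_{K-1})$, the criterion is

$$J_K = \operatorname{Tr}(Y^T Y) - \operatorname{Tr}(Q_{K-1}^T Y^T Y Q_{K-1})$$

Optimization becomes

$$\max_{Q_{K-1}} \operatorname{Tr}(Q_{K-1}^T Y^T Y Q_{K-1})$$

When $Q_{K-1}$ is relaxed from rotated indicator vectors to an arbitrary matrix with orthonormal columns, the solution is the first $K-1$ principal components:

$$Q_{K-1} = (v_1, \ldots, v_{K-1})$$

PCA → K-means

Clustering by PCA uses the connectivity matrix

$$C = \frac{e e^T}{n} + \sum_{k=1}^{K-1} v_k v_k^T \approx \sum_{k=1}^{K} q_k q_k^T = \sum_{k=1}^{K} h_k h_k^T$$

Probability of connectivity between points $i$ and $j$:

$$p_{ij} = \frac{c_{ij}}{c_{ii}^{1/2}\, c_{jj}^{1/2}}$$

It is thresholded as

$$p_{ij} \leftarrow \begin{cases} 0, & \text{if } p_{ij} < \alpha \\ 1, & \text{if } p_{ij} \ge \alpha \end{cases}, \qquad 0 < \alpha < 1, \text{ usually } \alpha = 0.5$$

Eigenvalues

- Case 1: 164030, 58, 5
- Case 2: 212920, 1892, 157

Thank you for your attention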
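The K-means criterion from the "Traditional K-means Clustering" slide and its trace form from the "K-means → PCA" slide can be compared numerically. The following is a minimal sketch, assuming NumPy and the slides' convention that $X$ stores the points $x_i$ as columns; the function names `kmeans_objective`, `indicator_matrix`, and `kmeans_objective_trace` are hypothetical, chosen only for illustration.

```python
import numpy as np


def kmeans_objective(X, labels, K):
    """J_K = sum_k sum_{i in C_k} ||x_i - m_k||^2 for X of shape (d, n), points as columns."""
    J = 0.0
    for k in range(K):
        Xk = X[:, labels == k]                  # points of cluster C_k
        m_k = Xk.mean(axis=1, keepdims=True)    # centroid m_k
        J += float(((Xk - m_k) ** 2).sum())
    return J


def indicator_matrix(labels, K):
    """H_K = (h_1, ..., h_K): h_k is 1/sqrt(n_k) on the members of C_k and 0 elsewhere."""
    H = np.zeros((labels.shape[0], K))
    for k in range(K):
        members = labels == k
        H[members, k] = 1.0 / np.sqrt(members.sum())
    return H


def kmeans_objective_trace(X, labels, K):
    """The same criterion in trace form: Tr(X^T X) - Tr(H_K^T X^T X H_K)."""
    H = indicator_matrix(labels, K)
    G = X.T @ X                                  # n x n Gram matrix X^T X
    return float(np.trace(G) - np.trace(H.T @ G @ H))


# Usage: both forms should agree for any labeling with non-empty clusters.
rng = np.random.default_rng(0)
X = rng.normal(size=(2, 12))                     # d = 2, n = 12, random data
labels = np.array([0, 1, 2] * 4)                 # an arbitrary assignment to K = 3 clusters
print(kmeans_objective(X, labels, 3), kmeans_objective_trace(X, labels, 3))
```

The trace form depends on the clustering only through $H_K$, which is what makes the relaxation to principal components possible.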
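The eigenvector relations on the "PCA - continuation" slide can be checked with NumPy. This sketch obtains them through `numpy.linalg.svd`, which is one possible route and not necessarily the one used in the paper; `pca_eig` is a hypothetical name, and the random data only serve as an illustration.

```python
import numpy as np


def pca_eig(X):
    """Center X (shape (d, n), points as columns) and return (Y, lambdas, U, V).

    Computed via the SVD Y = U diag(s) V^T, so lambda_k = s_k^2 are the
    eigenvalues of Y Y^T (and of Y^T Y), u_k = U[:, k] and v_k = V[:, k],
    ordered by decreasing lambda_k.
    """
    x_bar = X.mean(axis=1, keepdims=True)        # mean vector x_bar
    Y = X - x_bar                                # y_i = x_i - x_bar
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return Y, s ** 2, U, Vt.T


rng = np.random.default_rng(1)
X = rng.normal(size=(3, 10))                     # d = 3, n = 10, random data
Y, lam, U, V = pca_eig(X)

for k in range(len(lam)):
    u_k, v_k = U[:, k], V[:, k]
    print(k, lam[k],
          np.allclose(Y @ Y.T @ u_k, lam[k] * u_k),        # Y Y^T u_k = lambda_k u_k
          np.allclose(Y.T @ Y @ v_k, lam[k] * v_k),        # Y^T Y v_k = lambda_k v_k
          np.allclose(v_k, Y.T @ u_k / np.sqrt(lam[k])))   # v_k = Y^T u_k / lambda_k^{1/2}

# Y = sum_k lambda_k^{1/2} u_k v_k^T
Y_rebuilt = sum(np.sqrt(lam[k]) * np.outer(U[:, k], V[:, k]) for k in range(len(lam)))
print(np.allclose(Y, Y_rebuilt))

# Dropping the 1/(n-1) factor only rescales the covariance eigenvalues.
cov_eigs = np.sort(np.linalg.eigvalsh(np.cov(X)))[::-1]
print(np.allclose(cov_eigs, lam / (X.shape[1] - 1)))
```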
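The "K-means → PCA" argument can be traced numerically: build the rotated indicators $Q_K = H_K T$, confirm that $q_K = e/\sqrt{n}$, and confirm that $J_K = \operatorname{Tr}(Y^T Y) - \operatorname{Tr}(Q_{K-1}^T Y^T Y Q_{K-1})$. In this sketch (NumPy assumed, helper names hypothetical) the first $K-1$ columns of $T$ are an arbitrary orthonormal completion obtained from a QR factorization; that choice is an assumption, since only the last column $t_K$ is fixed by the derivation. The last check uses the fact that a matrix with $K-1$ orthonormal columns cannot capture more than the $K-1$ largest eigenvalues of $Y^T Y$, so the relaxed optimum gives a lower bound on $J_K$.

```python
import numpy as np


def indicator_matrix(labels, K):
    """H_K = (h_1, ..., h_K): h_k is 1/sqrt(n_k) on the members of C_k, 0 elsewhere."""
    H = np.zeros((labels.shape[0], K))
    for k in range(K):
        members = labels == k
        H[members, k] = 1.0 / np.sqrt(members.sum())
    return H


def kmeans_objective(X, labels, K):
    """J_K computed directly from the centroids (X has the points as columns)."""
    J = 0.0
    for k in range(K):
        Xk = X[:, labels == k]
        J += float(((Xk - Xk.mean(axis=1, keepdims=True)) ** 2).sum())
    return J


def orthonormal_T(counts, seed=0):
    """A K x K orthonormal T whose last column is t_K = (sqrt(n_1/n), ..., sqrt(n_K/n))^T.

    The other K-1 columns are an arbitrary orthonormal completion
    (here: QR of random vectors, an illustrative assumption).
    """
    K = counts.shape[0]
    t_K = np.sqrt(counts / counts.sum())          # unit vector, since sum_k n_k / n = 1
    rng = np.random.default_rng(seed)
    M = np.column_stack([t_K, rng.normal(size=(K, K - 1))])
    T, _ = np.linalg.qr(M)                        # T[:, 0] = +/- t_K
    if T[:, 0] @ t_K < 0:
        T[:, 0] = -T[:, 0]
    return np.roll(T, -1, axis=1)                 # move t_K to the last column


rng = np.random.default_rng(2)
K = 3
labels = np.repeat([0, 1, 2], [4, 5, 6])          # n_1 = 4, n_2 = 5, n_3 = 6
n = labels.shape[0]
X = rng.normal(size=(5, n))                       # d = 5, n = 15, random data
Y = X - X.mean(axis=1, keepdims=True)             # centered data, so Y e = 0

Q = indicator_matrix(labels, K) @ orthonormal_T(np.bincount(labels, minlength=K))
Q_km1 = Q[:, :K - 1]                              # Q_{K-1} = (q_1, ..., q_{K-1})
G = Y.T @ Y

J_direct = kmeans_objective(X, labels, K)         # computed on the uncentered X
J_via_Q = float(np.trace(G) - np.trace(Q_km1.T @ G @ Q_km1))
top = np.sort(np.linalg.eigvalsh(G))[::-1][:K - 1]  # lambda_1, ..., lambda_{K-1}
lower = float(np.trace(G) - top.sum())

print(np.allclose(Q[:, -1], np.ones(n) / np.sqrt(n)))   # q_K = e / sqrt(n)
print(np.allclose(Q.T @ Q, np.eye(K)))                  # columns of Q_K are orthonormal
print(np.isclose(J_direct, J_via_Q))                    # trace form with Q_{K-1}
print(J_direct >= lower - 1e-9)                         # relaxed optimum bounds J_K from below
```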
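The "PCA → K-means" slide can be sketched as follows, assuming NumPy: take the first $K-1$ eigenvectors $v_k$ of $Y^T Y$, form $C$, normalize it to $p_{ij}$, and threshold at $\alpha = 0.5$. The slides do not spell out how cluster labels are read off the resulting 0/1 matrix; taking its connected components is an assumption made here, and `connectivity_clusters` is a hypothetical name. The data are three tight, well-separated synthetic groups, for which the thresholded matrix is expected to be block diagonal.

```python
import numpy as np


def connectivity_clusters(X, K, alpha=0.5):
    """Sketch of clustering by PCA through the connectivity matrix.

    X has shape (d, n) with points as columns. Returns the thresholded 0/1
    matrix and one label per point.
    """
    n = X.shape[1]
    Y = X - X.mean(axis=1, keepdims=True)               # centered data
    _, evecs = np.linalg.eigh(Y.T @ Y)                   # eigenvalues in ascending order
    V = evecs[:, ::-1][:, :K - 1]                        # v_1, ..., v_{K-1}
    C = np.ones((n, n)) / n + V @ V.T                    # C = e e^T / n + sum_k v_k v_k^T
    d = np.sqrt(np.diag(C))
    P = C / np.outer(d, d)                               # p_ij = c_ij / (c_ii^{1/2} c_jj^{1/2})
    A = P >= alpha                                       # 1 if p_ij >= alpha, else 0

    # Assumption: clusters are read off as connected components of A.
    labels = np.full(n, -1)
    current = 0
    for start in range(n):
        if labels[start] >= 0:
            continue
        labels[start] = current
        stack = [start]
        while stack:
            i = stack.pop()
            for j in np.flatnonzero(A[i]):
                if labels[j] < 0:
                    labels[j] = current
                    stack.append(j)
        current += 1
    return A.astype(int), labels


rng = np.random.default_rng(3)
centers = np.array([[0.0, 10.0, 0.0],
                    [0.0, 0.0, 10.0]])                   # one column per synthetic cluster center
true_labels = np.repeat([0, 1, 2], 5)
X = centers[:, true_labels] + 0.1 * rng.normal(size=(2, 15))
A, labels = connectivity_clusters(X, K=3)
print(labels)
```

With noisy or overlapping clusters the thresholded relation need not be transitive, so the connected-component read-out is only a rough heuristic.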
